Goodbye MegaMek?

A disappointing update on one of the BattleTech community's beloved fan projects

This is going to be another unhappy issue in an unhappy year. If you look to BattleTech as a way to get away from real world garbage… I’m never going to say that you shouldn’t read something of mine, but I understand if you skip this one. For everyone else – look, I don’t have anything cute for the intro. Let’s dive in.

What Happened?

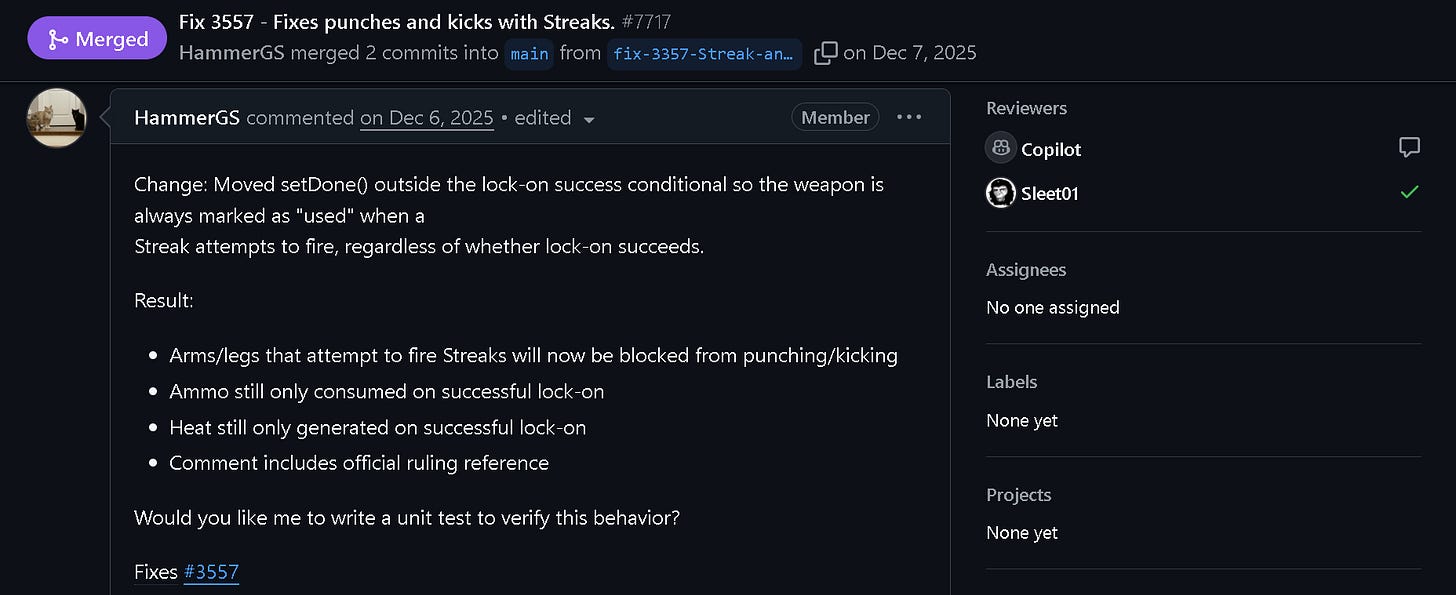

Many readers will remember me making favorable reference to MegaMek, a suite of open source applications for playing digital BattleTech. I’m not retracting any of those remarks, though a lot would have to change to get me to make any more. That’s because last week, when I checked in on the development page for the MekHQ (campaign manager) part of MegaMek, this was waiting:

There were five entries with the AI Generated Code tag, the first of them pushed in November 2025. That didn’t feel great, but it was only the beginning. Checking the main MegaMek application turns up (at the time of writing) eighty-five changes tagged “AI generated fix” accepted into the project, and the MegaMekLab app has seven accepted changes tagged “AI Generated Code”. And unfortunately for those taking comfort from the “fix” language in the MegaMek data, that label is somewhat misleading: many of these changes implement whole new features. Worse yet, a couple of other change entries looked a lot like they were AI-written and got added to MegaMek without being labeled as such. I’m sure that this was just a slip of the keys, but it’s concerning that “HammerGS”, the project leader, submitted what looks pretty plainly like AI-generated work without the label. Are other team members better at following standards?

The Problems with AI

We don’t have time for a full review of the criticism of AI doing the rounds these days, so (with some apology) I’m largely going to set the context with links. Last year, we saw the first court ruling showing that the development of generative AI violated copyright, and research published early this year has revealed that what AI does might be little more than copying. Environmental concerns about the explosion of AI infrastructure have gone mainstream. And then there’s just… the things going on with the Grok AI. Now, some people say that a lot of the problems here come from how the technology is being used, not from the fact that it’s being used at all. There’s optimism about scientific applications that use AI pattern recognition to assist human intelligence rather than replace it, and such uses are the standard example of “good AI”. (To be clear, talk about the usefulness, limitations, and concerns of AI isn’t new. Dr. Collier gave a pretty good explanation of a lot of this back in 2023.)

I won’t ask you to accept that the scientific uses of AI are “good”, though I hope that they at least come across as “less bad” when used carefully. With that in mind, where do we place the MegaMek team’s use of AI tools on a scale from the Grok AI making deepfakes to machine learning tools doing menial work for astrophysicists? Maybe I’m stuck in the bargaining stage of grief here, but I’m inclined to be generous to them because in some ways generative AI in software development is closer to scientific work than creative work.

Think about it this way: generative AI in visual arts is sharply different from a human being making the piece. There is such a thing as digital art, which uses computer tools to develop an image, but even that is nothing like entering a prompt and deciding whether you like the results. With programming, though, there have long been tools that essentially check the grammar of the code. It’s also common practice to look at solutions others have used for a programming problem and adapt them to get the desired result. Programmers might feel that moving from looking up a solution and implementing it to asking an AI to generate the code just cuts out a menial step. Past that, we have a case that looks a lot like what physicists do: applying machine learning tools to menial coding work, such as switching data from one file format to another.

But for me, there is a real problem with using AI to create the code in the first place. While concerns over this practice are mainstream (Valve has required disclosure of AI content for games going onto its Steam platform for two years now), my own view is probably a “writer-biased” position. If people are using AI as a spelling and grammar checker, well, I might try to talk them out of it, but at no point am I going to say they’re not writing. On the other hand, if generative AI is making the text in the first place? Well, Michael Stackpole said it better than I can:

In the same way, “vibe coding” using AI prompts doesn’t make someone a programmer and, in particular, it doesn’t mean that they understand what they’re doing – and that can lead to problems.

MegaMek’s AI Problem

OK, I might have showered you with too many links and concepts there. Let’s just go over the record of AI being used in MegaMek and focus on the specific issues that arise in that story. As best I can tell, they started using the Copilot AI tool to review their code a few years ago. To me, that’s analogous to AI grammar-checking. I’m not sure whether other tools could have filled that gap, and I’d rather they had gone with another option, but there’s no question that at that point, the creative work was being done by the MegaMek volunteers.

In a February 2025 update to the MegaMek blog (archived copy), the MegaMek team announced that:

we’ve had success converting XML to YAML by using the various AI tools (ChatGPT, Claude.ai,Gemini). We started by showing it a sample of the final YAML file then giving them the system data from the XML file and asking for it convert the information

That’s a little technical, but basically they were using generative AI to convert their database into a file format that was more useful for development going forward. Doing that kind of work by hand is tedious and error-prone. Again, I might prefer that they use another tool, but the MegaMek team could fairly say that AI played no creative part in the process. There was an update on the file format change in an August 2025 blog post. Here, I want to draw your attention to this line (and especially to the text I’ve emphasized):

While I know AI tools are controversial to some, ChatGPT, Co-Pilot, Claude.ai, and Gemini have all been successfully tested for XML-to-YAML conversions.

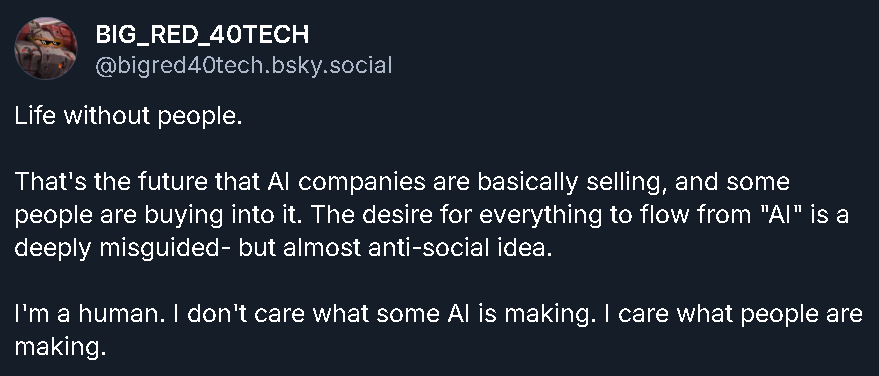

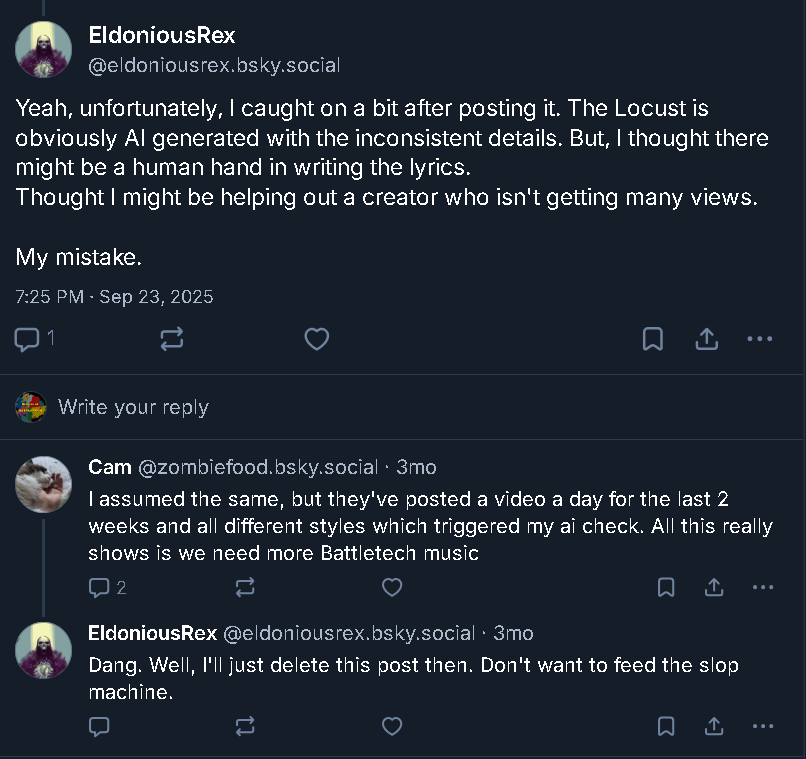

Until I saw that, the plan was to say that the MegaMek team had “failed to read the room”. It would have been easy to give a sample of folks in and around the BattleTech community (including contributors to MegaMek) who’ve made public statements expressing negative views on AI…

… and after showing those views, I’d say something like, “I’m not sure how the MegaMek team missed these sentiments, but people lead busy lives so perhaps they did.” From there, this could be treated like some kind of terrible mistake.

But the MegaMek team knew that this was going to be unpopular. Maybe the notes for 0.50.10 (archived copy) didn’t mention the use of generative AI in the development because it was still new. I could believe that. But then for the release of 0.50.11 (archived copy) there’s still no mention of AI code going into the project. Well, mistakes happen, don’t they? I just feel like we crossed a bridge when the State of the Universe 2026 (archived copy) specifically thanked contributors to the project – and for some reason didn’t mention the Claude AI that’s been producing large chunks of code.

Let’s be clear here: many people would probably carry on with MegaMek even if it was clearly labeled as produced with the assistance of generative AI. I’m also not accusing the MegaMek team of a serious attempt to conceal what they were doing. I didn’t hack anything to find those AI labels. But the team knew that AI is “controversial” and chose to be less than candid with their audience. That’s a breach of trust. Something like that makes it very hard to interpret the team’s actions charitably.

Why?

There’s a lot about this that I don’t get. When it comes to not informing their audience, the MegaMek team don’t even have the crass justification of a for-profit entity. Their day jobs would go unaffected if nobody ever downloaded a new version. They would still be just as able to pay their bills if nobody ever played MegaMek again. I could speculate about the decision-making process the way I would with fictional characters – but in contrast to people like Victor Steiner-Davion, there really is a fact of the matter as to what the MegaMek team believes.

So let me come at my incomprehension from another angle. Why would they even want a machine to do their hobby for them? This is a team of volunteers. My impression is that they put their time into MegaMek out of love for BattleTech, but also for the enjoyment of the development process. I’ve dabbled in modding and development projects, and while there are frustrations along the road, it is pretty satisfying to solve a problem. And listen, my ADHD brain is not wired to take satisfaction from doing things. (When I finish an issue of this newsletter, my typical feeling would be best described as “mild relief”.) Solving code problems probably feels even better for more typical people.

Still, there might be a partial explanation in a line from the 2026 State of the Universe blog post. Hammer writes:

I still look forward to the day when I can play Operation LIBERATION—from space to surface—as a campaign.

See, if you look at what Hammer was committing to the project before the AI-created “fixes” – well, I’m sure that a lot more was being done with the database, as well as management, planning, and coordination work. All of that’s really important. But it looks a lot like Hammer doesn’t have much of a background in Java, the language used for MegaMek, and for whatever reason (probably time commitments?) didn’t learn it along the way. I can only imagine the frustration of dreaming about some end state for the project while the “to do” list never shrinks. Last year, Catalyst Game Labs might have even added to it with the release of BattleTech Gothic, the first of a series of alternate BattleTech universes with new rules and options. In September 2025, CGL also started a playtest process to inform a new version of the core BattleTech rules. Perhaps there was a temptation to use generative AI to “speed things up”? There’s something understandable about that.

Of course, understanding doesn’t translate to acceptance. Even if you don’t have a moral issue with the use of generative AI in this project, it’s a gamble. The MegaMek team are betting that no legal ruling will cause problems down the line, despite indications that such rulings are coming. And they’re betting the work of real people on this, because there are still volunteer developers putting their time into MegaMek code that depends on AI-generated content.

What Now?

There’s a tradition with this sort of piece to find some other group and declare, “it’s those people I really feel bad for”. Well, with all due respect to tradition and anyone more affected – I haven’t gotten to that point. Right now, I’m stuck looking at plans and materials I had for maybe doing an old-fashioned “After Action Report” series using MegaMek and realizing that they’re fated for nothing more glorious than the recycle bin.

Still, this piece wasn’t written just for me. Look, I don’t know where this news puts you. I certainly don’t know what you should do about it – it’s probably one of those things that everyone has to find their own answer on. But I know some people are going to be making decisions for bigger communities. The excellent folks at the MechCommander Review Circuit have been running organized play events online using MegaMek and (to the best of my knowledge) they had nothing to do with the decision to start using generative AI in the application’s development. There are YouTubers and streamers putting out MegaMek-based content, too, and a lot of them are going to be left with hard decisions. If you’re in that boat, I don’t have anything as substantial as “advice” – just some closing observations.

In the short term, it looks to me like the last version of the full MegaMek suite that didn’t use AI-created code was the 0.50.06 milestone release from May 2025 – so if you need a MegaMek but don’t want AI, that’s probably the place to go. In the long run, it’s pretty likely that some other solution will arrive. Maybe the MegaMek team will correct course. Maybe another team will “fork” from the project on an anti-AI basis. Or maybe another group of people who love BattleTech and programming will start an entirely different project.

Thanks for reading all the way to the end. If you need a palate-cleanser after that, there’s a fairly new TOP SECRET article for supporters. If you’re signed up through Substack (or just want to see the preview), check out that version of My Name is Katrina. There’s also a version on Ko-Fi for folks who prefer to support through that platform.
